Nvidia CEO Spills The Beans On The Future Of GeForce GPUs

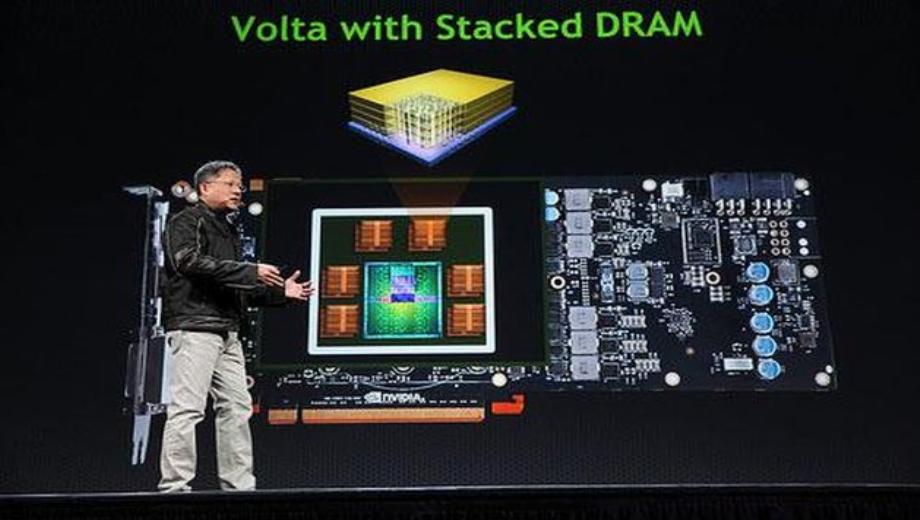

During his keynote at Nvidia’s GPU Technology Conference, the company’s CEO, Jen-Hsun Huang, shared a handful of details about the upgrades planned for its next two generations of graphics chips.

Nvidia’s current Kepler graphics architecture will be followed by “Maxwell,” which is scheduled for release in early 2014. The main draw of Maxwell is the introduction of “unified virtual memory,” which allows the GPU to see the contents of system RAM and the CPU to see the contents of graphics memory. This should make programming the GPU much easier, especially in GPGPU applications.

After Maxwell comes “Volta.” Volta will move its graphics memory onto the GPU silicon, and that memory will also be stacked vertically. Together, these design decisions allow memory bandwidth to increase substantially over current architectures. According to Nvidia’s CEO, Volta’s integrated memory will deliver 1 TB/s of bandwidth, more than three times the memory bandwidth of Nvidia’s $1,000 Titan.

Jen-Hsun Huang didn’t pin down Volta’s release window, but we don’t expect it before 2016. Nvidia has a tradition of releasing a new architecture every two years: Fermi was released in 2010, Kepler in 2012, and Maxwell is scheduled for 2014.
